Summary of Main Findings

- Inbound-balanced sample performed better on point estimates of available benchmarks

- Outbound-balanced sample performed better on all tests of concurrent validity

- Despite strict quotas, inbound sample required weighting to produce better estimates

- Rim weights improved estimates of many socio-economic attributes BUT not technology device ownership

Nonprobability web panels often draw samples to match U.S. Census demographic targets, in an attempt to make their samples more representative of the general population.

We compare two approaches to building census-balanced samples: (a) outbound balancing, in which email invitations are sent to a sample of web panelists whose profile demographics match census targets, and the completed sample is then adjusted with post-stratification weights; and (b) inbound balancing, in which quota screeners are applied as web panelists start the survey, so that the completed sample closely matches census targets and minimal post-hoc weighting is needed.
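Inbound balancing can be pictured as a running tally of completes per quota cell. The sketch below is a minimal illustration, not the screener used in the study. The cells (age group by gender), the target counts, the arrival order and the `make_screener` helper are all hypothetical, and the sketch assumes that every admitted respondent completes the survey.

```python
from collections import Counter


def make_screener(targets):
    """Hypothetical helper: return (screen, filled).

    screen(cell) admits a respondent only while that cell's quota is open;
    filled counts admitted respondents per cell (assumed to all complete).
    """
    filled = Counter()

    def screen(cell):
        # Admit if this cell still has room under its target count.
        if filled[cell] < targets.get(cell, 0):
            filled[cell] += 1
            return True
        # Quota already full, or cell not targeted: screen the respondent out.
        return False

    return screen, filled


# Hypothetical quota targets (age group, gender) for 10 completes.
targets = {("18-44", "F"): 3, ("18-44", "M"): 3, ("45+", "F"): 2, ("45+", "M"): 2}
screen, filled = make_screener(targets)

# Hypothetical order in which panelists start the survey.
arrivals = [("18-44", "F")] * 5 + [("45+", "M")] * 4 + [("18-44", "M")] * 2
admitted = [cell for cell in arrivals if screen(cell)]
print(len(admitted))  # prints 7
```

Some cells may never fill (here, women 45+). This is one possible reason why a quota-balanced sample can still need some post-hoc weighting.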

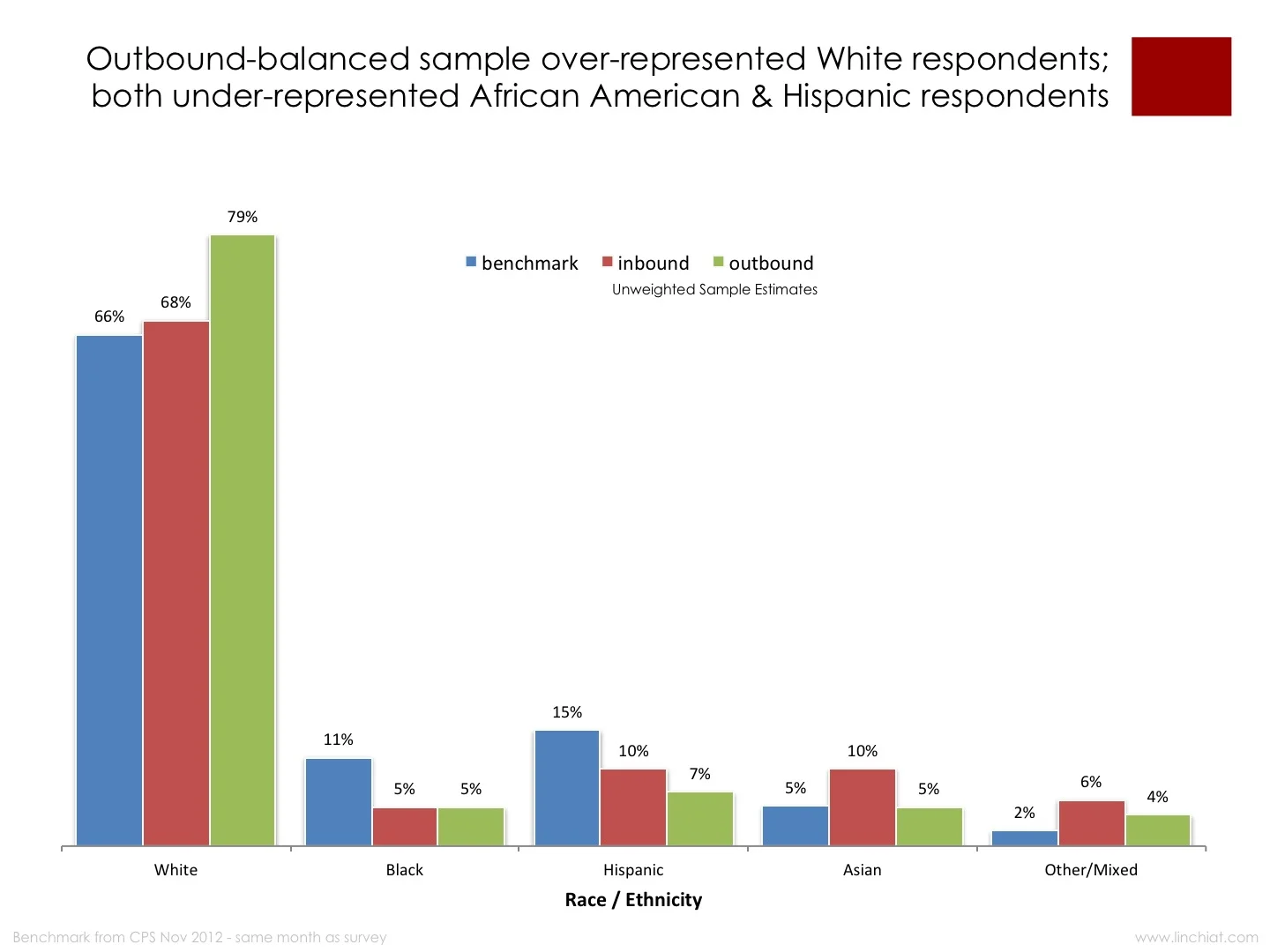

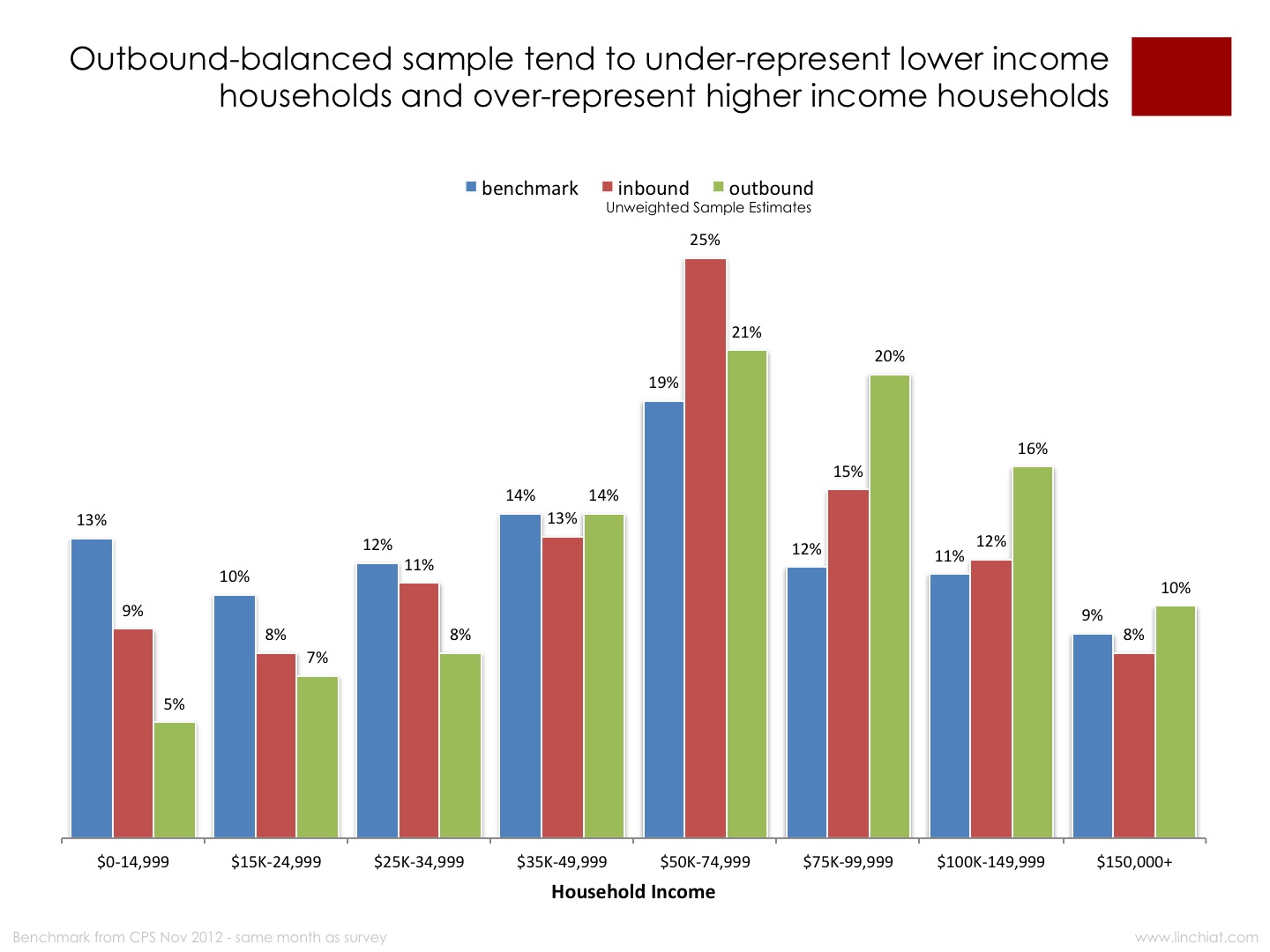

First, we evaluate unweighted estimates from the two survey samples against population parameters for basic demographics released by the U.S. Census Bureau. The charts below reveal sample biases in age, race, and household income.

Next, we weight the two samples with post-stratification rim weights developed through iterative raking on multiple demographic dimensions: age, gender, race/ethnicity, and household income.
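Raking (iterative proportional fitting) adjusts the weights one dimension at a time. It repeats until the weighted sample margins match the population margins on every weighting variable. A minimal stdlib-only sketch follows. The `rake` and `weighted_share` helpers, the two dimensions and the target shares are hypothetical stand-ins for the study's actual census targets on age, gender, race/ethnicity and household income. The sketch also applies no weight trimming.

```python
def rake(respondents, targets, max_iter=1000, tol=1e-10):
    """Hypothetical raking helper (iterative proportional fitting).

    respondents: list of dicts mapping dimension -> category
    targets: dict mapping dimension -> {category: population share}
    Returns one weight per respondent, scaled to average 1.
    """
    weights = [1.0] * len(respondents)
    for _ in range(max_iter):
        max_change = 0.0
        for dim, shares in targets.items():
            # Current weighted total for each category of this dimension.
            totals = {}
            for w, r in zip(weights, respondents):
                totals[r[dim]] = totals.get(r[dim], 0.0) + w
            grand = sum(totals.values())
            # Rescale so this dimension's weighted shares equal the targets.
            for i, r in enumerate(respondents):
                new_w = weights[i] * shares[r[dim]] * grand / totals[r[dim]]
                max_change = max(max_change, abs(new_w - weights[i]))
                weights[i] = new_w
        if max_change < tol:  # a full pass barely moved any weight: converged
            break
    mean_w = sum(weights) / len(weights)
    return [w / mean_w for w in weights]


def weighted_share(respondents, weights, dim, category):
    """Weighted proportion of respondents in `category` on dimension `dim`."""
    hit = sum(w for w, r in zip(weights, respondents) if r[dim] == category)
    return hit / sum(weights)


# Hypothetical sample that over-represents younger respondents.
sample = (
    [{"age": "18-44", "gender": "F"}] * 3
    + [{"age": "18-44", "gender": "M"}] * 2
    + [{"age": "45+", "gender": "F"}] * 1
    + [{"age": "45+", "gender": "M"}] * 2
)
# Hypothetical marginal targets (not actual census figures).
targets = {"age": {"18-44": 0.5, "45+": 0.5}, "gender": {"F": 0.51, "M": 0.49}}

weights = rake(sample, targets)
print(round(weighted_share(sample, weights, "age", "18-44"), 3))
print(round(weighted_share(sample, weights, "gender", "F"), 3))
```

Raking matches each margin separately rather than every joint cell. This is why it needs only marginal census targets, not the full joint distribution of the weighting variables.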

Comparing sample estimates before vs. after weighting shows clear improvements in accuracy against available census benchmarks for home and vehicle ownership, household size, relocation, and health insurance status, as well as against FDIC benchmarks on banked vs. unbanked populations.
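The text does not say how accuracy was scored. One common choice, assumed here, is the absolute difference in percentage points between a sample estimate and its benchmark, averaged across benchmarks. The sketch below computes this for unweighted and weighted estimates. The items, flags, weights and benchmark values are invented toy numbers, not the study's data, and `estimate_share` and `mean_abs_error` are hypothetical helpers.

```python
def estimate_share(flags, weights=None):
    """Proportion of respondents whose flag is True (unweighted if no weights)."""
    if weights is None:
        weights = [1.0] * len(flags)
    return sum(w for f, w in zip(flags, weights) if f) / sum(weights)


def mean_abs_error(estimates, benchmarks):
    """Average absolute error, in percentage points, across benchmark items."""
    errors = [abs(estimates[item] - benchmarks[item]) * 100 for item in benchmarks]
    return sum(errors) / len(errors)


# Hypothetical toy data: six respondents, two yes/no items, and rim weights.
responses = {
    "owns_home": [True, False, False, True, True, False],
    "has_health_insurance": [True, True, False, True, True, True],
}
weights = [1.5, 0.5, 0.5, 1.5, 1.0, 1.0]
# Hypothetical benchmark shares (not actual census or FDIC values).
benchmarks = {"owns_home": 0.65, "has_health_insurance": 0.85}

unweighted = {item: estimate_share(f) for item, f in responses.items()}
weighted = {item: estimate_share(f, weights) for item, f in responses.items()}
print(f"unweighted MAE: {mean_abs_error(unweighted, benchmarks):.2f} pts")
print(f"weighted MAE:   {mean_abs_error(weighted, benchmarks):.2f} pts")
```

In this toy example, weighting shrinks the error on one item but enlarges it on the other. This is why per-item errors are worth inspecting alongside the average.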

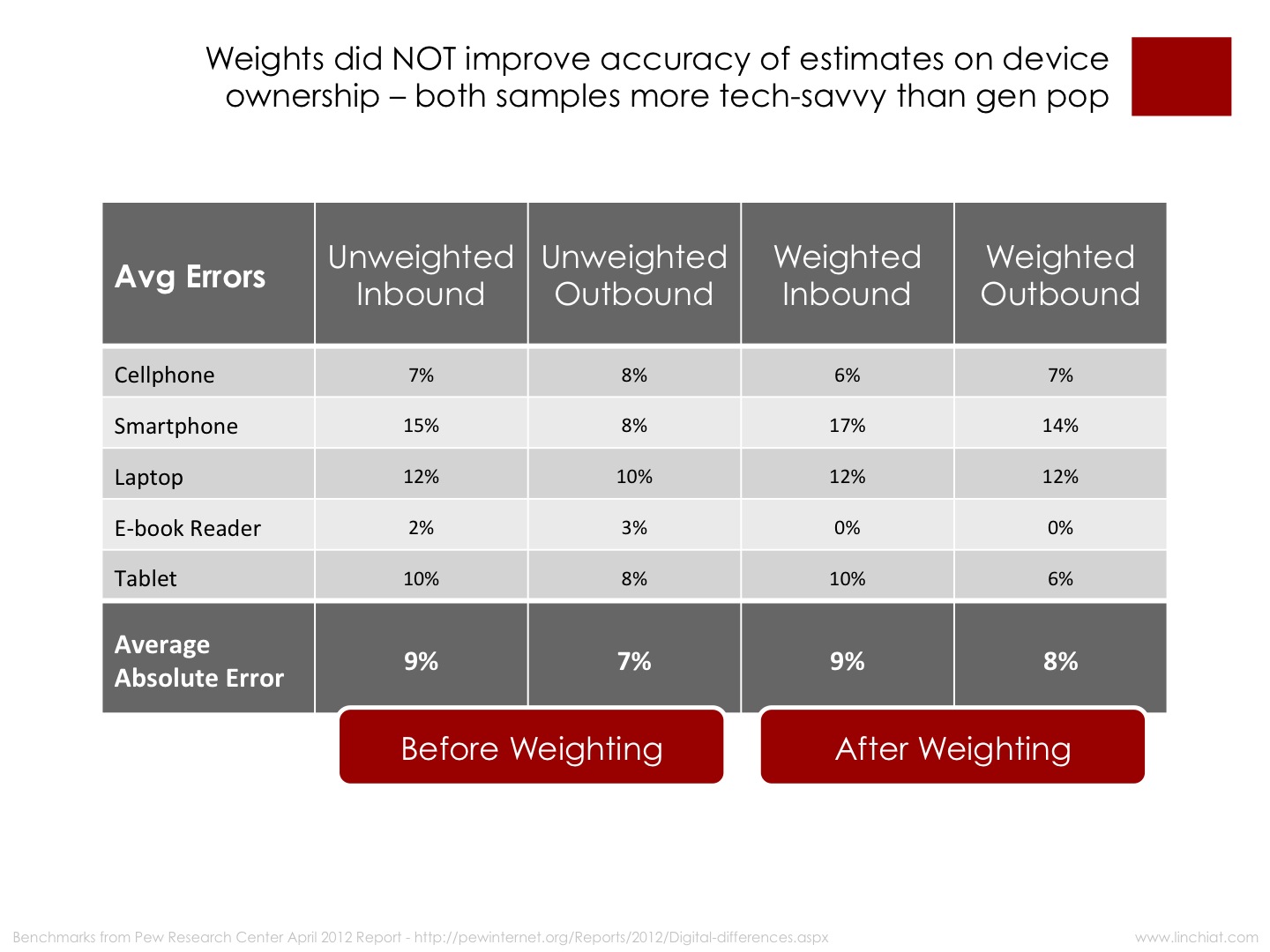

However, rim weights built only on demographics did NOT improve the accuracy of estimates of technology device ownership. Compared against similar estimates from the Pew Research Center on mobile and digital-divide measures, both the inbound and outbound samples are more tech-savvy than the general population.

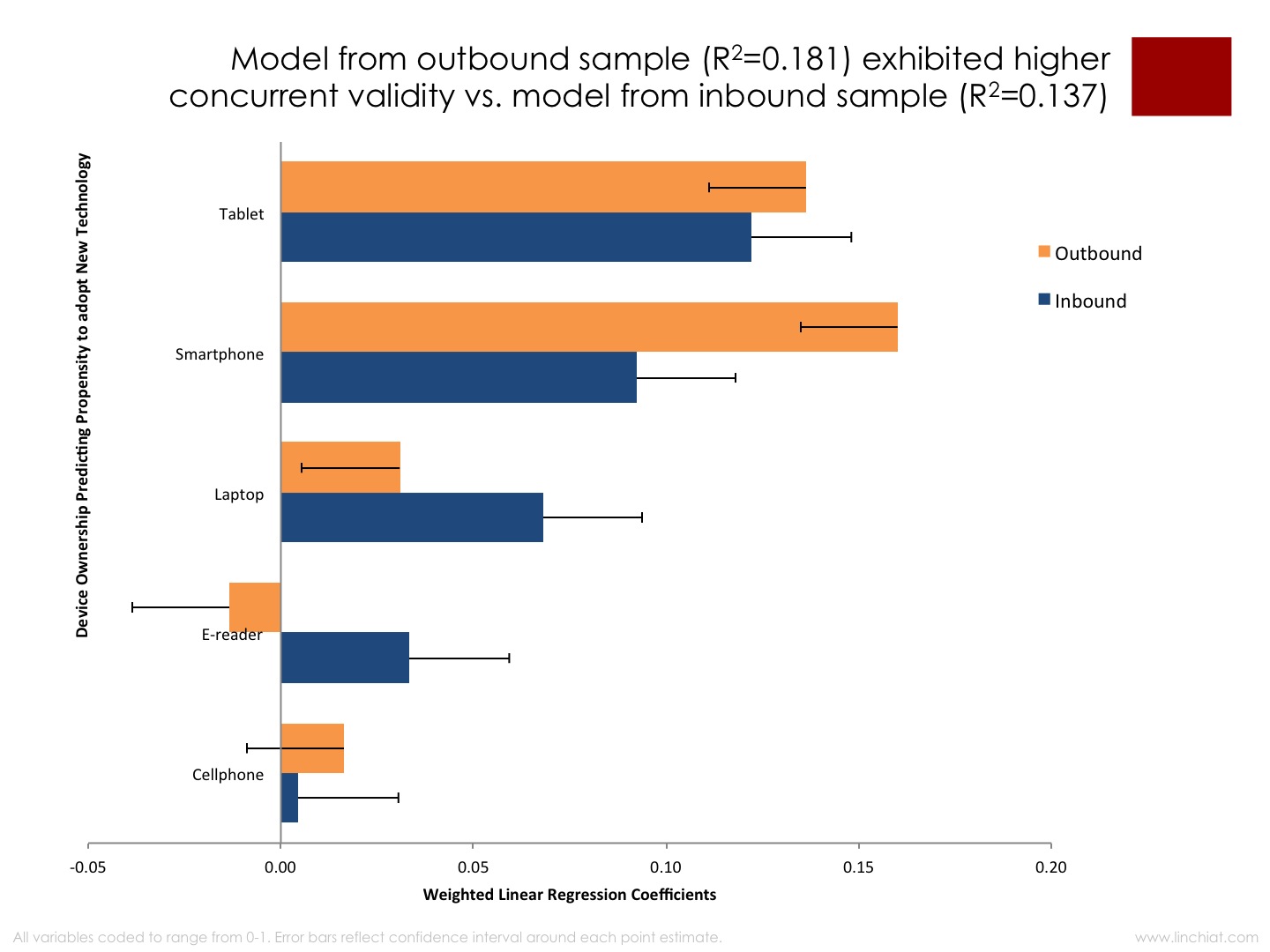

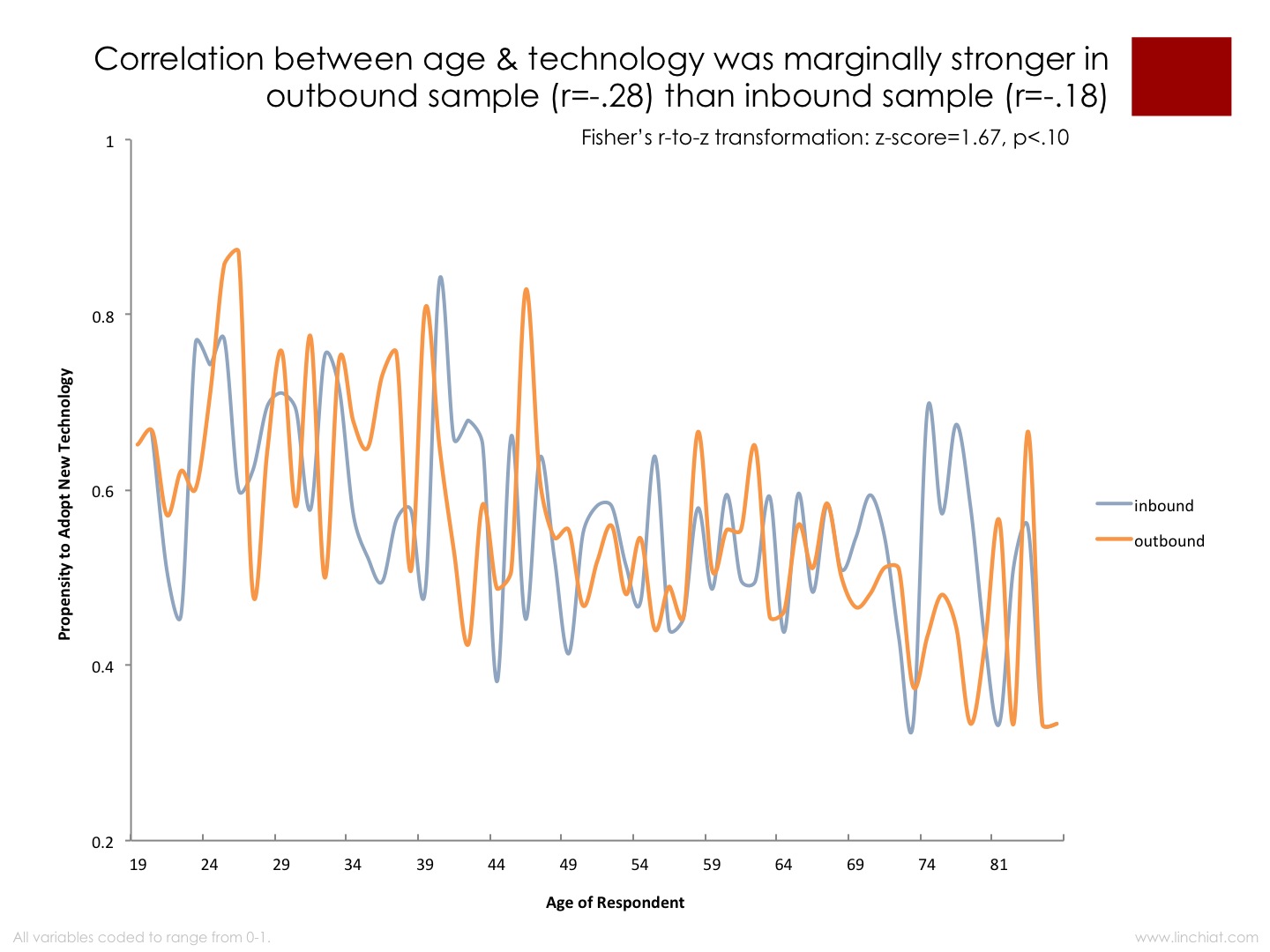

Next, we fit two predictive models on the available variables. The first tests the concurrent validity of attitudes and behaviors related to technology adoption. The second tests whether each sample replicates known demographic differences in the likelihood of having private health insurance. In both tests, the outbound sample showed higher concurrent validity than the inbound sample.
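The models are not specified here. As a hedged illustration, assume a logistic regression of a 0/1 outcome (such as having private health insurance) on demographic predictors. Under this assumption, a sample shows concurrent validity if its fitted coefficients have the signs expected from prior research. The `fit_logistic` and `signs_match` helpers, the two binary predictors, the expected signs and the cell counts are all hypothetical. In practice, each sample would be fit separately, with its own weights, and the results compared.

```python
import math


def fit_logistic(X, y, weights=None, lr=0.5, n_iter=2000):
    """Hypothetical helper: weighted logistic regression fit by batch
    gradient ascent on the average log-likelihood.

    X: list of feature lists (an intercept column is prepended here)
    y: list of 0/1 outcomes
    Returns [intercept, coef_1, coef_2, ...].
    """
    if weights is None:
        weights = [1.0] * len(X)
    total_w = sum(weights)
    rows = [[1.0] + list(x) for x in X]
    beta = [0.0] * len(rows[0])
    for _ in range(n_iter):
        grad = [0.0] * len(beta)
        for row, yi, wi in zip(rows, y, weights):
            z = sum(b * xj for b, xj in zip(beta, row))
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability
            for j, xj in enumerate(row):
                grad[j] += wi * (yi - p) * xj
        beta = [b + lr * g / total_w for b, g in zip(beta, grad)]
    return beta


def signs_match(beta, expected_signs):
    """True if every slope (intercept excluded) has its expected sign."""
    return all((b > 0) == (s > 0) for b, s in zip(beta[1:], expected_signs))


# Hypothetical cell counts: (high_income, employed) -> (n_insured, n_not).
cells = {(1, 1): (4, 1), (1, 0): (2, 2), (0, 1): (2, 2), (0, 0): (1, 4)}
X, y = [], []
for features, (n_yes, n_no) in cells.items():
    X += [list(features)] * (n_yes + n_no)
    y += [1] * n_yes + [0] * n_no

beta = fit_logistic(X, y)
# Assumed expectation for illustration: both predictors raise the odds.
print(signs_match(beta, expected_signs=[+1, +1]))  # prints True
```

Plain gradient ascent keeps the sketch dependency-free. A statistics package would also report standard errors, which a real validity comparison would need.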

Summary of Main Findings

- Inbound sample (weighted) performed better on point estimates of available benchmarks

- Outbound sample (weighted) performed better on all tests of concurrent validity

- Despite strict quotas, inbound sample required weighting to produce better estimates

- Rim weights improved estimates of many socio-economic attributes BUT not device ownership

The full conference presentation can be retrieved here <PPT>

Source: Chang, LinChiat and Kavita Jayaraman. 2013. Comparing Outbound vs. Inbound Census-balanced Web Panel Samples. Paper presented at the annual meeting of the European Survey Research Association.